Legal AI tools have matured rapidly. Document summarization, drafting assistance, semantic search, and classification are now widely available and increasingly polished. In demos, many platforms appear capable of transforming legal work overnight.

Yet inside corporate IP teams, adoption tells a different story.

Despite strong interest and growing budgets, many legal AI tools fail to move beyond pilots or narrow use cases. They generate initial excitement, then quietly fade from daily workflows. The problem is rarely that AI is inaccurate or immature. More often, it is that most legal AI tools are built for generic legal workflows, not for the complexity, risk, and decision density of enterprise intellectual property.

Industry surveys support this pattern. A 2025 study of corporate legal departments found that while AI adoption is accelerating, many teams struggle to operationalize tools beyond early pilots due to trust, integration, and workflow alignment challenges—particularly in complex legal domains.

For in-house IP counsel and Heads of IP evaluating legal AI tools, understanding this gap is essential.

The difference between success and failure lies not in model size or feature lists, but in whether AI is designed for how corporate IP actually works.

The Demo-to-Reality Gap in Legal AI Tools

Legal AI tools are typically demonstrated in controlled scenarios: a clean patent document, a limited dataset, a single task. In these settings, performance looks impressive.

Real corporate IP work is far messier.

A single IP decision may involve:

- Prior art across jurisdictions and technical domains

- Prosecution history spanning years

- Portfolio-level positioning and competitive context

- Input from R&D, business leaders, and outside counsel

- Long-term consequences for enforcement, licensing, or freedom to operate (FTO)

Most legal AI tools are optimized for isolated tasks, not for decisions that require integrating all of this context at once. As a result, they perform well in demos but struggle in production environments where nuance, continuity, and accountability matter.

This gap between controlled demonstrations and real-world deployment is widely recognized. Research on AI adoption in legal practice shows that tools often perform well in narrow, testable scenarios, but face significant friction when applied to complex, multi-stakeholder legal workflows with long-term accountability.

This is the core demo-to-reality gap that IP leaders encounter.

Why Corporate IP Breaks the Assumptions Behind Most Legal AI Tools

Most legal AI tools are built on implicit assumptions about how legal work functions: discrete matters, reversible decisions, and limited downstream impact. Senior in-house IP leaders know that corporate IP violates all three.

This is why tools that perform well in general legal contexts often struggle when deployed inside enterprise IP teams.

Surveys of in-house legal leaders echo this mismatch. While corporate counsel broadly recognize AI’s potential to improve efficiency, many report limited confidence in applying current tools to high-stakes legal work, citing readiness, trust, and fit with existing processes as key barriers.

IP decisions compound risk over time

In corporate IP, decisions rarely stand alone. Each filing, amendment, or strategic choice constrains the options available later—often in ways that only become visible years down the line.

- A filing locks in disclosures that competitors can study

- A claim amendment reshapes future enforcement leverage

- A missed invention permanently narrows the portfolio’s strategic surface area

For IP leaders, this creates a compounding risk profile: early decisions quietly shape long-term value, licensing outcomes, and litigation posture. Legal AI tools that optimize individual tasks without understanding these longitudinal effects may improve short-term efficiency while undermining long-term strategy.

Technical judgment is not separable from legal judgment

Senior IP leaders operate at the boundary between legal reasoning and technical reality. In practice, patentability, scope, and risk hinge on whether technical differences are meaningful—not just whether language appears distinct.

This is where many generic legal AI tools fall short. Even when trained on legal text, they often struggle to:

- Evaluate whether a technical distinction actually changes novelty or inventive step

- Reason about structure–function relationships in chemistry or life sciences

- Assess experimental nuance rather than surface-level similarity

Fluent drafting can mask shallow understanding. For IP leaders accountable for portfolio quality, that gap is unacceptable.

Accountability in IP is asymmetric and delayed

Corporate IP decisions are often judged long after they are made—during enforcement, licensing, due diligence, or FTO disputes. At that point, the question is no longer how fast a decision was made, but whether it was defensible.

This is why senior in-house teams demand:

- Clear reasoning behind conclusions

- Evidence trails that can withstand scrutiny

- Consistency with historical positions across the portfolio

Legal AI tools that operate as black boxes may accelerate early work, but they increase organizational exposure later. For leaders responsible for defending IP decisions over time, trust and explainability outweigh raw speed.

Why this matters for AI adoption

These structural realities explain why many legal AI tools fail to scale in corporate IP environments. They are designed around assumptions that don’t hold once decisions become cumulative, technically grounded, and strategically exposed.

The question is not whether AI can assist with IP work, but whether AI is built to operate under the same constraints, accountability, and time horizons as the team itself.

Common Failures of Legal AI Tools in Corporate IP

1. Overreliance on generic large language models

Many legal AI tools rely heavily on general-purpose LLMs with minimal IP-specific reasoning layers. This creates several issues:

- Hallucinated legal conclusions

- Overgeneralized examiner reasoning

- Inconsistent treatment of novelty and inventive step

In corporate IP, even occasional unreliability is unacceptable. Trust, once lost, is rarely regained.

2. Superficial domain adaptation

Labeling a tool “IP-focused” is not the same as engineering it for IP work. True domain adaptation requires:

- Training on patent-specific corpora

- Incorporation of prosecution history and examiner behavior

- Support for chemistry, biotech, and software-specific reasoning

Without this, legal AI tools remain generic at their core, regardless of branding.

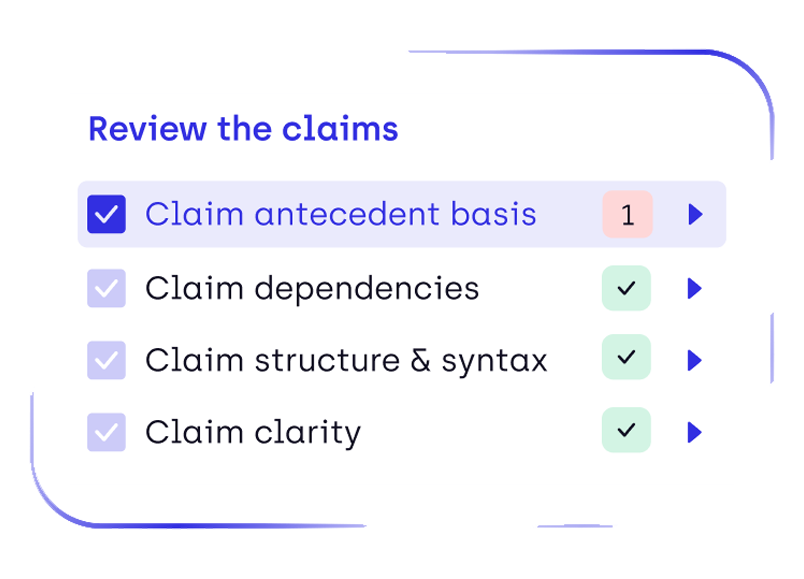

3. Lack of explainability and reviewer alignment

IP professionals do not simply need answers. They need to understand:

- Why a reference is relevant

- Which claim elements are affected

- Where uncertainty or risk remains

Legal AI tools that cannot surface reasoning steps or align outputs with examiner-style logic force users to redo the analysis manually, negating efficiency gains.

4. Workflow fragmentation

Many legal AI tools excel at one function: drafting, searching, reviewing, or summarizing. Used in isolation, they can be helpful. Used at scale, they create fragmentation.

IP teams are forced to:

- Export and re-import data

- Manually reconcile outputs

- Maintain parallel systems of record

Over time, this increases operational risk and reduces adoption.

Why Some Legal AI Tools Struggle at Enterprise Scale

Point solutions promise quick wins, but corporate IP work is inherently interconnected. A patent drafting decision affects prosecution. A prosecution outcome affects portfolio value. A portfolio decision affects business strategy.

Many legal AI tools suffer from context collapse. Each tool operates with a narrow view, unaware of:

- Related patent families

- Historical examiner interactions

- Strategic priorities across the portfolio

As portfolios grow, these blind spots compound. IP leaders find themselves managing tools instead of managing IP strategy.

What Successful Corporate IP AI Deployments Do Differently

Organizations that see real returns from legal AI tools don’t treat AI as a productivity add-on. They deploy it where corporate IP teams actually make decisions, and they hold it to the same standards of accountability as human analysis. The result is not flashier outputs, but tools that earn trust and stay in use.

They embed AI into IP decision workflows

Rather than automating isolated tasks, successful deployments connect AI outputs to the decisions that shape portfolio outcomes. AI supports reasoning across the IP lifecycle, instead of producing one-off answers that must be reinterpreted downstream.

In practice, this means AI contributes across common decision paths, including:

- Invention disclosure triage through filing recommendation

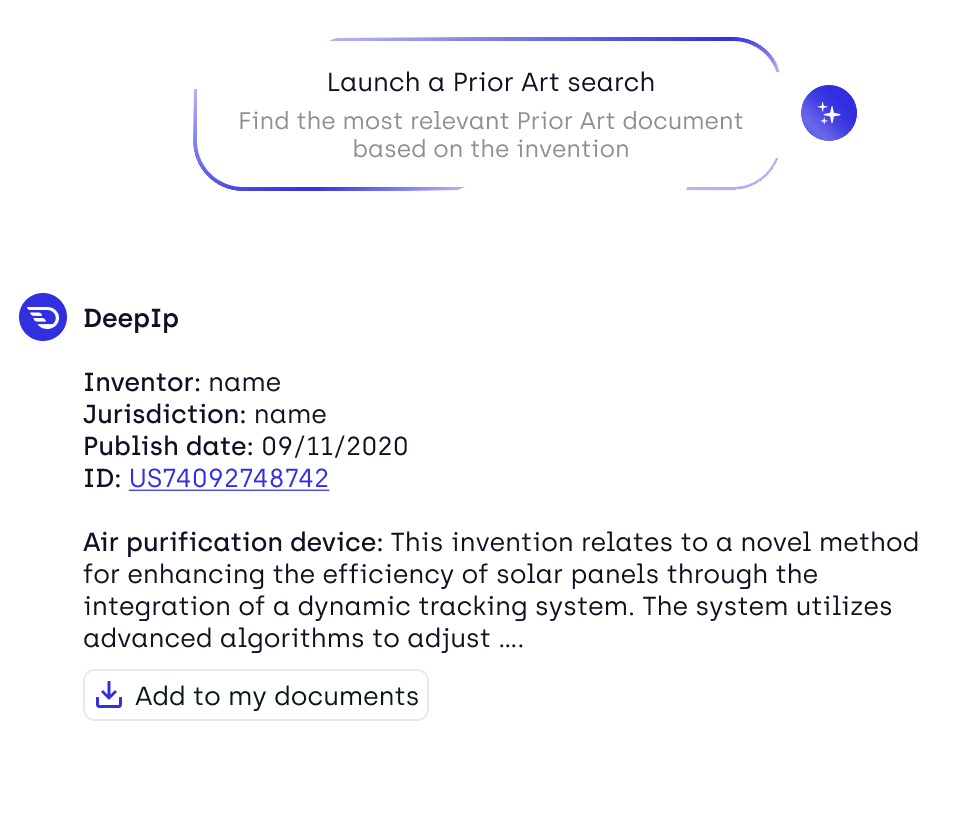

- Prior art analysis linked directly to claim strategy

- Office action review aligned with prior prosecution positions

- Portfolio review tied to investment, pruning, or coverage gaps

Embedding AI this way makes it part of how IP teams decide, not just how they draft.

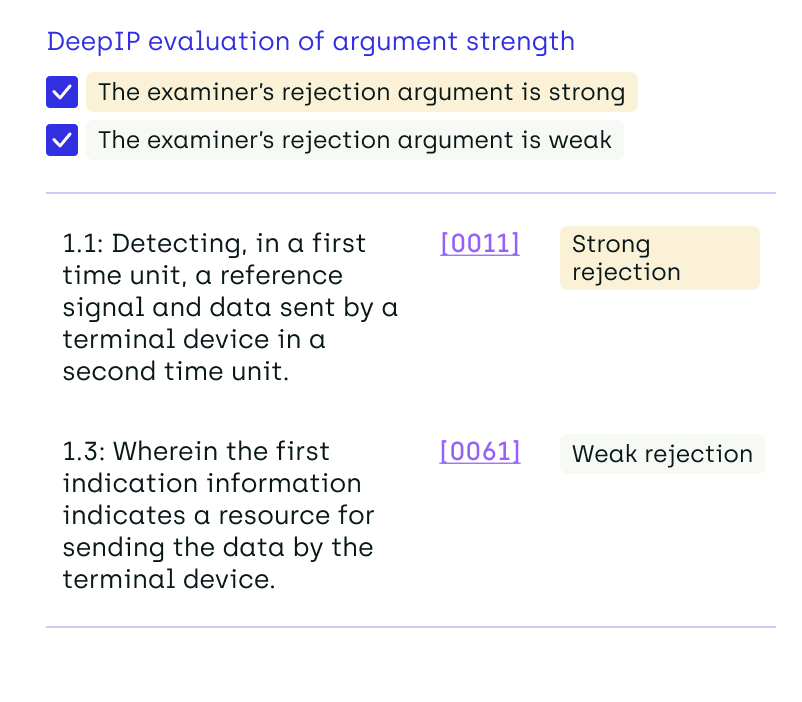

They prioritize explainability over fluency

In corporate IP, well-written text is less valuable than defensible reasoning. Teams adopt AI faster when outputs make it easy to understand why a conclusion was reached and where risk remains.

High-performing tools consistently surface:

- Evidence tied to specific claims, passages, or references

- Clear reasoning steps that reviewers can validate quickly

- Signals around uncertainty, ambiguity, or alternative interpretations

Explainability reduces review time, improves collaboration with outside counsel, and makes AI outputs usable in high-stakes contexts.

They incorporate portfolio and historical context by default

Point tools often analyze patents in isolation. Successful deployments do not. They ensure AI understands how individual matters fit into a broader portfolio and prosecution history.

Effective systems account for:

- Patent family relationships and shared specifications

- Claim evolution and prior amendments

- Earlier office actions and examiner behavior

- Competitive positioning across the portfolio

This context keeps AI outputs aligned with long-term IP strategy rather than short-term convenience.

They build governance into everyday use

AI adoption stalls when tools cannot meet enterprise standards for control and accountability. Successful deployments treat governance as a core capability, not an afterthought.

Industry analysts increasingly point to governance—not model capability—as the limiting factor in enterprise legal AI adoption. Deloitte predicts continued growth in legal AI investment but emphasizes that sustained value depends on aligning tools with enterprise governance, risk management, and long-term legal workflows.

At a minimum, built-in governance includes:

- Audit trails and version history

- Role-based access for internal and external users

- Clear data boundaries appropriate for sensitive IP

These safeguards allow AI to scale across teams without increasing risk.

What In-House IP Teams Should Demand from Legal AI Tools

Evaluating legal AI tools requires moving past surface features and asking whether a platform can withstand the realities of corporate IP work. The following criteria help distinguish tools that scale from those that stall after pilots.

1. Domain depth

Is the system designed for patent and IP data from the ground up, or adapted from general legal workflows?

2. Contextual reasoning

Can the AI reason across prior art, prosecution history, and portfolio context, or does it treat each task in isolation?

3. Explainability

Are conclusions traceable to evidence and logic that a human reviewer can assess and defend?

4. Workflow integration

Does the tool reduce fragmentation across drafting, review, and prosecution—or add another point solution to manage?

5. Enterprise readiness

Can the platform support data security, governance, and scale across large portfolios and multiple stakeholders?

The Future of Legal AI Tools in Corporate IP

The next phase of legal AI will not be defined by larger models or more polished interfaces. It will be defined by contextual, agentic intelligence—systems that understand how IP decisions are made, why they matter, and what is at stake.

Across the legal industry, a consensus is emerging along the same lines: the tools that deliver lasting value will integrate domain expertise, historical data, and governance into decision-making workflows rather than operating as standalone assistants.

For corporate IP teams, this represents a clear opportunity. By moving beyond generic legal AI tools and demanding platforms designed for IP complexity, organizations can achieve meaningful efficiency gains without sacrificing quality, control, or strategic alignment.
