The Hidden Friction in Office Action Responses

Office action (OA) response collaboration sits at one of the most operationally dense points in the patent lifecycle. OA responses require legal interpretation, technical judgment, strategic tradeoffs, and strict timing discipline, often under intense cost and workload pressure.

Yet despite their importance, OA responses remain one of the least standardized workflows in patent prosecution.

Across many organizations, the process still looks like this: An examiner issues an office action. Outside counsel prepares a draft. Internal teams review under time pressure. Comments are exchanged over email. Decisions are revisited late in the process. Strategic intent is inferred rather than documented.

This approach does not fail for lack of expertise. It fails because collaboration itself is unstructured.

As patent portfolios scale, technologies become more complex, and AI-assisted prosecution accelerates drafting speed, the risks of fragmented collaboration compound. Inconsistent responses, misaligned arguments, and missed strategic opportunities become systemic—not exceptional.

Standardizing office action response collaboration is now a core prosecution governance issue, not merely an operational one.

Why Office Action Collaboration Breaks Down

The breakdown is rarely caused by a single failure. It emerges from structural mismatches between stakeholders.

Outside counsel optimizes for persuasive legal argumentation within tight billing and time constraints. Internal teams optimize for patent portfolio coherence, long-term claim strategy, and business alignment. Technical stakeholders focus on scientific accuracy and defensibility.

These priorities are complementary—but without a shared framework, they compete rather than reinforce.

Common friction points include:

- Lack of a consistent review lens: Each response is evaluated differently depending on who reviews it and how much time they have.

- Implicit strategy: Claim scope decisions are embedded in edits and redlines, rather than explicitly assessed.

- Asynchronous feedback loops: Comments arrive late, forcing reactive rewrites rather than strategic refinement.

- Overreliance on precedent: Prior responses are reused without reassessing whether examiner reasoning, claim context, or portfolio position has materially changed.

The result is not necessarily poor responses—but uneven ones, where quality depends on individual reviewers rather than institutional process.

The Cost of Inconsistent OA Responses

Inconsistent office action response collaboration creates costs that rarely appear as a single line item. Over time, it leads to:

- Longer patent prosecution timelines due to avoidable examiner pushback

- Increased rounds of amendment that narrow claims prematurely

- Reduced predictability across related families and jurisdictions

- Higher cumulative prosecution spend driven by rework rather than progress

More subtly, it erodes confidence. When responses are not evaluated against a common standard, it becomes difficult to answer basic questions such as:

- Is this argument strong relative to examiner practice?

- Are we conceding too much, too early?

- Would a different response approach reduce future office actions?

Without standardization, these questions are answered inconsistently—or not at all.

Standardization Does Not Mean Rigidity

A common concern is that standardization will flatten nuance or constrain expert judgment. In practice, the opposite is true.

Effective standardization does not prescribe what arguments to make. It standardizes how arguments are evaluated.

The goal is not uniformity of outcome, but consistency of decision-making logic. High-performing patent organizations standardize three layers of the OA response process:

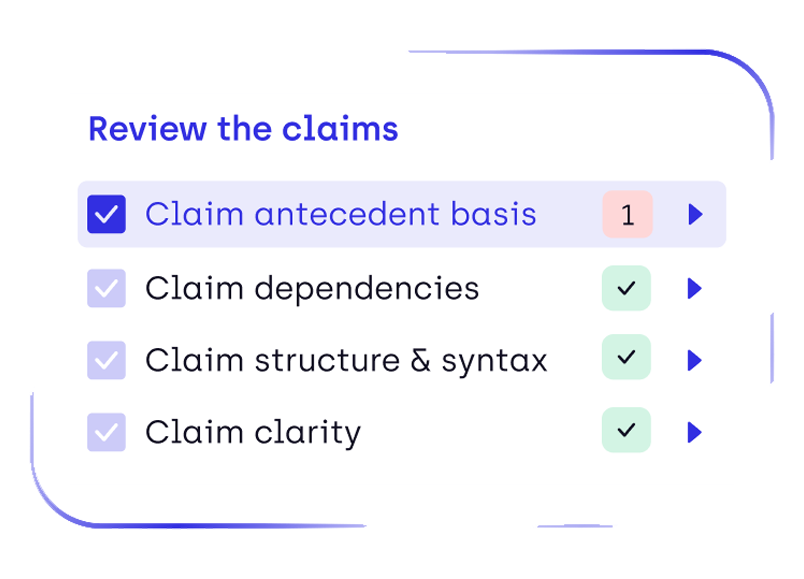

- The evaluation framework

- The collaboration workflow

- The feedback and learning loop

Each layer reinforces the others.

Layer One: A Shared Evaluation Framework

Before collaboration can be standardized, teams need a shared understanding of what “good” looks like. This starts with defining consistent evaluation criteria for OA responses, such as:

- Alignment with examiner reasoning rather than generic rebuttal

- Strength and specificity of novelty and inventive step arguments

- Impact of amendments on long-term claim scope

- Consistency with prior positions across the patent portfolio

- Risk introduced for downstream enforcement or licensing

Critically, these criteria should be applied before edits begin.
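One way to make such a framework concrete is to encode the criteria as a fixed rubric that every reviewer fills in before touching the draft. The sketch below is illustrative only: the criterion names, the 1 to 5 scale, and the threshold are assumptions chosen to mirror the list above, not an established standard or any vendor's schema.

```python
from dataclasses import dataclass

# Hypothetical criterion names mirroring the evaluation criteria listed above.
CRITERIA = (
    "examiner_alignment",
    "argument_strength",
    "claim_scope_impact",
    "portfolio_consistency",
    "downstream_risk",
)


@dataclass(frozen=True)
class Assessment:
    """One reviewer's scores for a draft response (assumed scale: 1 = weak, 5 = strong)."""
    reviewer: str
    scores: dict

    def __post_init__(self):
        # Every reviewer must score every criterion, so reviews stay comparable.
        missing = set(CRITERIA) - set(self.scores)
        if missing:
            raise ValueError(f"missing criteria: {sorted(missing)}")
        for name in CRITERIA:
            if not 1 <= self.scores[name] <= 5:
                raise ValueError(f"score for {name} must be between 1 and 5")


def flag_weak_criteria(assessment, threshold=3):
    """Return the criteria scored below the threshold, in rubric order."""
    return [name for name in CRITERIA if assessment.scores[name] < threshold]
```

Because the rubric rejects incomplete assessments, two reviewers looking at the same draft produce results that can be compared criterion by criterion, which is the point of a shared review lens.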

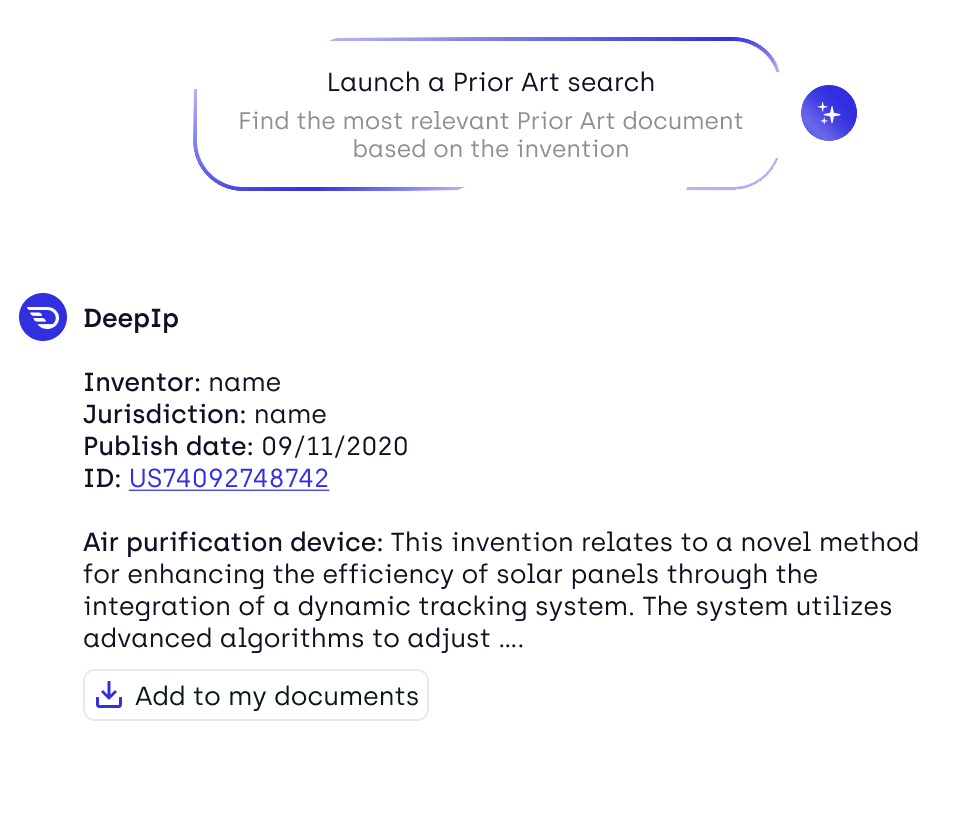

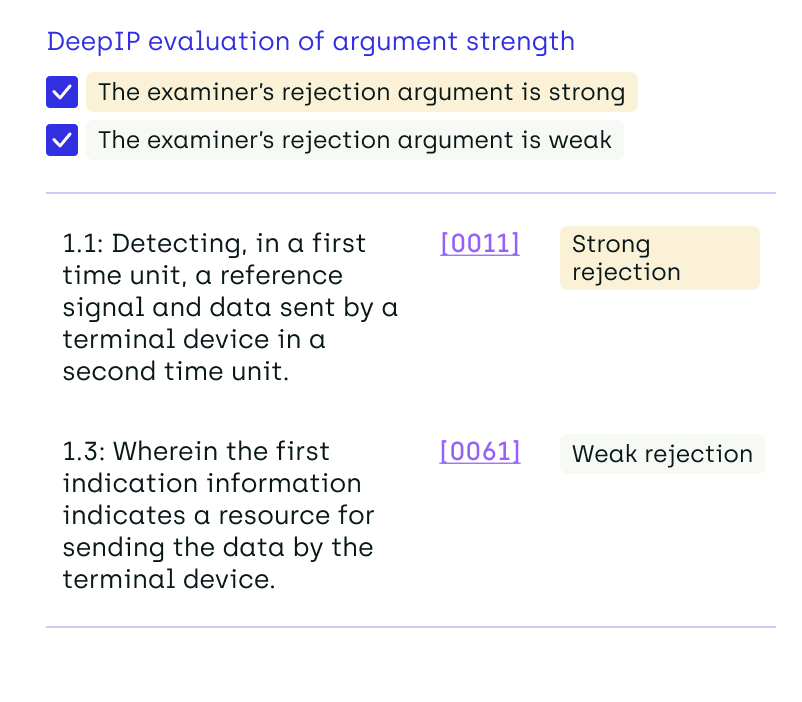

Increasingly, organizations are adopting AI-based examiner-style review tools to operationalize this layer. Instead of relying solely on subjective judgment, responses can be reviewed against simulated examiner reasoning, highlighting weaknesses, overclaims, or logical gaps early.

This transforms review discussions from “Do we like this?” to “Does this withstand scrutiny?”

Platforms such as DeepIP’s AI Reviewer are designed specifically for this purpose, providing a structured, examiner-aligned assessment that both internal teams and outside counsel can reference using the same analytical lens.

Layer Two: Structured Collaboration Workflows

Once evaluation criteria are shared, office action response collaboration itself must be structured.

In many organizations, OA collaboration still happens across disconnected tools: email threads, Word comments, IP management systems, and ad hoc calls. Standardization requires a defined sequence of interaction.

A mature workflow typically includes:

- An initial response draft prepared by outside counsel

- Automated or semi-automated examiner-style review to surface risk areas

- Focused internal review centered on strategic decisions, not line edits

- Consolidated feedback returned through a single channel

- Final validation against the original evaluation framework

This structure does two things. First, it shifts internal review time away from rewriting and toward decision-making. Second, it creates a repeatable rhythm that law firms can align with across matters. The result is faster convergence, not a slower process.
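The defined sequence described above can be sketched as a small state machine that refuses to skip or reorder stages. This is a minimal illustration under stated assumptions: the stage names and the `ResponseWorkflow` class are hypothetical and do not describe how any particular prosecution platform works.

```python
from enum import Enum


class Stage(Enum):
    """Hypothetical stage names, in the order of the workflow listed above."""
    DRAFT = 1
    EXAMINER_STYLE_REVIEW = 2
    INTERNAL_REVIEW = 3
    CONSOLIDATED_FEEDBACK = 4
    FINAL_VALIDATION = 5


class ResponseWorkflow:
    """Tracks one OA response and enforces the stage order."""

    def __init__(self, matter_id):
        self.matter_id = matter_id
        self.completed = []

    @property
    def next_stage(self):
        # Enum members iterate in definition order.
        stages = list(Stage)
        if len(self.completed) < len(stages):
            return stages[len(self.completed)]
        return None  # every stage is done

    def complete(self, stage):
        expected = self.next_stage
        if stage is not expected:
            expected_name = expected.name if expected else "nothing (workflow finished)"
            raise ValueError(f"expected {expected_name}, got {stage.name}")
        self.completed.append(stage)
```

A call such as `ResponseWorkflow("M-001").complete(Stage.FINAL_VALIDATION)` raises an error, because validation cannot precede the draft and review stages. Making the order explicit is a design choice: it trades flexibility for the repeatable rhythm the workflow is meant to create.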

Platforms designed for patent prosecution increasingly support this layer by centralizing drafts, feedback, and review stages within a single workflow. DeepIP’s Patent Prosecution module, for example, allows internal teams and outside counsel to collaborate in a shared response environment, reducing reliance on email threads and disconnected tools while maintaining clear review ownership.

Layer Three: Feedback and Institutional Learning

The most overlooked layer is feedback. Most OA responses are treated as isolated events. Once filed, attention moves on to the next deadline. Over time, this prevents organizations from learning systematically.

Standardized collaboration enables feedback loops such as:

- Tracking which argument types consistently overcome examiner objections

- Identifying amendment patterns that lead to faster allowance

- Comparing outcomes across firms, technologies, or examiner groups

- Refining internal guidance based on empirical results

AI-enabled prosecution analytics increasingly make this feasible at scale, allowing organizations to move from anecdotal experience to evidence-based refinement of response strategy. This is where standardization delivers compounding returns.
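As a rough sketch of the first feedback loop, the function below computes how often each argument type overcame an objection. The record format (an argument-type label paired with a true/false outcome) is an assumption made for illustration; real prosecution data would need its own classification scheme and far more context than a single flag.

```python
from collections import defaultdict


def overcome_rate_by_argument(records):
    """Return the share of responses, per argument type, that overcame the objection.

    `records` is an iterable of (argument_type, overcame) pairs, where
    `overcame` is True if the objection was withdrawn after the response.
    """
    totals = defaultdict(int)
    wins = defaultdict(int)
    for argument_type, overcame in records:
        totals[argument_type] += 1
        if overcame:
            wins[argument_type] += 1
    return {name: wins[name] / totals[name] for name in totals}


# Placeholder records for illustration only, not real prosecution outcomes.
example_records = [
    ("claim_amendment", True),
    ("claim_amendment", False),
    ("argument_only", True),
]
rates = overcome_rate_by_argument(example_records)
```

Rates like these only become meaningful with enough filings per category, and they should be read alongside examiner group and technology area rather than in isolation.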

The Role of AI in Enabling (Not Replacing) Collaboration

AI’s role in OA responses is often misunderstood as automating the drafting stage. In reality, its most immediate value lies in coordination and quality control. AI systems can:

- Review responses against examiner logic before human review

- Highlight inconsistencies across related cases

- Surface strategic tradeoffs introduced by amendments

- Provide a neutral reference point in internal–external discussions

Rather than replacing attorneys, AI reduces the cognitive load of review, allowing experts to focus on judgment rather than detection.

DeepIP’s patent review module is designed to act as a consistent first-pass partner across responses, ensuring that every draft is evaluated against the same standards before collaboration begins. This levels the playing field between different firms, technologies, and reviewers.

Law Firms Benefit from Standardization Too

From the outside counsel perspective, standardized collaboration reduces friction rather than increasing it.

Clear evaluation criteria reduce subjective revision cycles. Structured workflows minimize last-minute surprises. Consistent feedback improves alignment over time.

Law firms that adapt quickly to standardized OA collaboration models often see:

- Fewer revision rounds

- Clearer strategic direction from clients

- More predictable timelines

- Stronger long-term relationships

Standardization is not about control. It’s about clarity.

Moving from Ad Hoc to Operationalized Prosecution

Office action responses are no longer isolated legal tasks. They are repeatable, high-volume decision points that shape portfolio value.

As portfolios grow and AI accelerates drafting speed, collaboration—not generation—becomes the bottleneck.

Organizations that treat OA responses as an operational system rather than a series of one-off events are better positioned to:

- Reduce prosecution timelines

- Improve claim durability

- Control cumulative costs

- Scale without sacrificing quality

Standardizing collaboration across internal teams and law firms is the foundation of that shift.

The technology exists. The frameworks are emerging. The remaining challenge is organizational intent.
