Artificial Intelligence (AI) is a transformative technology poised to revolutionize various industries, including intellectual property (IP) law. However, AI is often a buzzword that encompasses a broad spectrum of technologies and applications. To harness AI's potential effectively, it's crucial to understand its specifics, the associated risks, and strategies for mitigating those risks. This comprehensive guide, based on a recent webinar, delves into these aspects in detail.

Understanding AI: Beyond the Buzzword

AI comprises various technologies, such as machine learning, natural language processing, and computer vision, that can perform tasks traditionally requiring human intelligence. However, the term AI can be as vague as saying "we're using math" without specifying whether it's basic arithmetic or complex calculus. For IP practitioners, specificity matters, much like distinguishing between different types of IP lawyers (e.g., patent attorneys vs. trademark attorneys).

When discussing AI, it’s essential to ask: What specifically is the system providing? How will it be used? Understanding the particular type of AI and its applications ensures clearer expectations and more effective integration.

Identifying and Mitigating Major AI Risks

Integrating AI into professional workflows, especially in fields handling sensitive information like IP law, entails several risks that require careful consideration:

1. Confidentiality: Can sensitive data leak through AI systems?

The landscape has changed significantly. For example, when OpenAI released ChatGPT two years ago, they utilized every user's data to train and improve their models. Today, the ecosystem is much safer, with serious providers offering plans that guarantee a higher level of confidentiality.

Confidentiality remains a paramount concern for patent practitioners. AI introduces new dimensions to these concerns, particularly regarding data storage and access. Key questions to consider include:

- Prompt Storage: How are the prompts stored, and who has access to them?

- Data Reuse: Does the AI provider use your data to improve their models? If so, is it solely for your benefit, or do they share improvements with other users?

- Protection Against Hacking: How does the provider protect their model against hacking techniques that could expose raw training data?

When selecting an AI provider, consider their data processing and retention policies, whether they reuse data for retraining, and their monitoring practices. Providers like Microsoft Azure, for example, offer monitoring exemptions to ensure no unauthorized access. Davinci, thanks to its long-term relationship with Microsoft, is the only AI service provider currently offering this monitoring exemption.

2. Security: Is my data safe?

Security is the second most critical topic after confidentiality. Ensuring data safety requires asking additional questions, especially with AI, which often involves using cloud services. Over the past decade, 70% of law firms have transitioned their data to the cloud, attracted by its security, disaster recovery, and cost benefits.

However, this shift also introduces risks, particularly concerning data location, encryption during transit, and security once the data reaches the cloud. Key considerations include:

- Data Location: Where is the data stored, and in which geographic regions are the servers located?

- Data Encryption: Is the data encrypted during transit and at rest?

- Preventing Data Leakage: How does the provider ensure that data does not leak, especially between different clients?

Law firms must understand that using the cloud means relying on someone else’s computer infrastructure, which adds layers of risk. Providers like Microsoft Azure are preferred due to their extensive security certifications and infrastructure.

For example, at Davinci, we operate on Azure and offer data storage in both the US and Europe to comply with export control rules. We use both logical and physical separation to safeguard data and ensure encryption.

While it's easy for providers to make promises, it's crucial to have third-party audits and certifications to ensure they deliver on their claims. For instance, at Davinci, we hold ISO 27001 and SOC 2 Type 2 certifications.

A good practice is to ask your service provider to show you their Trust Center to verify their compliance and certifications.

3. Factuality: Can I rely on AI-generated content?

Once foundational security measures are in place, it's crucial to focus on the factuality of AI-generated content. AI is designed to match patterns, not necessarily to produce truthful information. The risks related to credibility, trust, financial impact, and potential legal complications due to AI inaccuracies are significant.

Two years ago, models like ChatGPT had a 30% hallucination rate, but improvements have reduced this to 3%. Despite this progress, challenges remain due to the inherent nature of AI models and their dependence on high-quality training data.

Partnering with providers who invest significantly in enhancing model accuracy is essential. As an IP professional, remember that while AI tools are highly beneficial, they will never replace human expertise. The AI tool should be viewed as a copilot—you are in control. Implement strict fact-checking protocols before submitting anything and be aware of the model's limitations.

The reliability of the content also heavily depends on the robustness of the service provider. Given the significant variation between providers, partnering with those using the best technologies is essential. At Davinci, we are well-versed in these topics. Our parent company, Kili Technology, specializes in creating and selling high-quality training data sets to major clients like Mistral AI, SAP, and the U.S. Department of Defense. We leverage this expertise to enhance the quality of generated content.

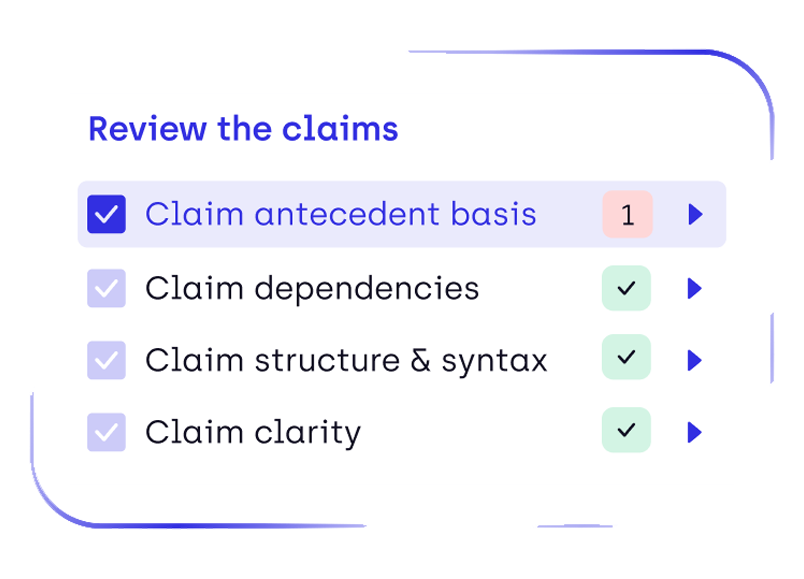

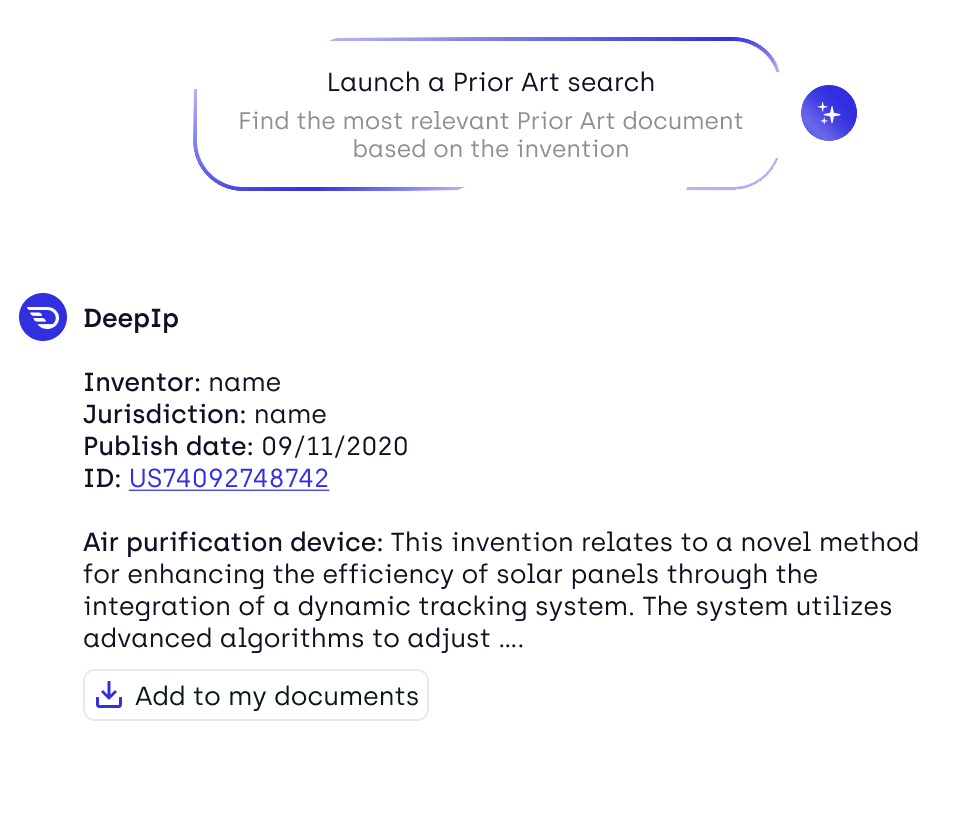

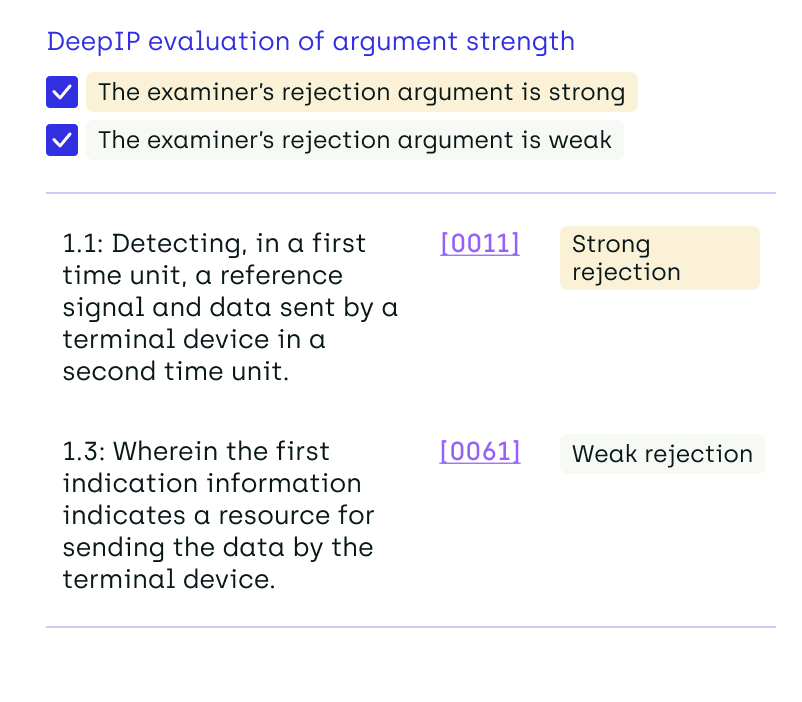

Our models are designed to prevent issues such as patent profanity (absolute or limiting terms that can unintentionally narrow a patent's scope), which commonly appears in the output of generative models. Additionally, we have developed models to assess the level of support for claims by measuring the distance between the model's reformulations and the initial set of claims. This ensures higher accuracy and reliability in the outputs generated by our AI tools.
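To make the idea of "distance" between a reformulation and a set of claims more concrete, here is a minimal sketch. It is not Davinci's model: it assumes a simple word-overlap (Jaccard) distance as a stand-in, and the function names `tokens`, `jaccard_distance`, and `support_distance` are hypothetical, chosen only for illustration. A production system would more likely rely on semantic embeddings than on raw word overlap.

```python
import re


def tokens(text: str) -> set[str]:
    """Lowercase the text and return its set of alphanumeric word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard_distance(a: str, b: str) -> float:
    """Return 0.0 for identical vocabularies and 1.0 for no shared words."""
    ta, tb = tokens(a), tokens(b)
    if not ta and not tb:
        return 0.0  # two empty texts are treated as identical
    return 1.0 - len(ta & tb) / len(ta | tb)


def support_distance(reformulation: str, claims: list[str]) -> float:
    """Distance from a reformulation to its closest claim.

    Assumption for this sketch: a lower value suggests the reformulation
    is better supported by the original claims. Requires at least one claim.
    """
    if not claims:
        raise ValueError("at least one claim is required")
    return min(jaccard_distance(reformulation, claim) for claim in claims)
```

In a review workflow, a reformulation whose distance exceeds some chosen threshold could be flagged for closer human inspection. The threshold itself would have to be tuned on real drafting data.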

4. Regulatory Compliance

Regulatory compliance is another critical aspect that patent practitioners must consider. Ensuring that AI providers adhere to evolving regulations and guidelines, such as the USPTO guidance on AI tool usage, is essential.

While these guidelines remain incomplete, the rapid efforts by organizations to establish clear directives are promising. When evaluating a vendor, consider asking the following questions:

- Commitment to Compliance: Is the provider dedicated to following current regulations and adapting as they evolve?

- Control and Transparency: Does the practitioner retain control over the AI's output, and can the provider offer proof of compliance?

There are ways to design AI tools that support regulatory requirements, including providing documentation of actions performed by both the machine and the human. Ensuring that your AI provider can demonstrate this capability is crucial for maintaining compliance and accountability.
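As an illustration of what documenting actions performed by both the machine and the human could look like, here is a minimal sketch of an audit trail. It is an assumption made for illustration, not a description of any specific vendor's implementation or of a format required by any regulator. The names `AuditEvent` and `AuditLog` and their fields are hypothetical.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditEvent:
    actor: str      # "human" or "machine" (hypothetical convention)
    action: str     # short label, e.g. "draft" or "review"
    detail: str     # free-text description of what was done
    timestamp: str  # ISO 8601 time in UTC


class AuditLog:
    """Append-only record of who did what while preparing a document."""

    def __init__(self) -> None:
        self._events: list[AuditEvent] = []

    def record(self, actor: str, action: str, detail: str) -> AuditEvent:
        """Add one event, rejecting actors outside the two known kinds."""
        if actor not in ("human", "machine"):
            raise ValueError(f"unknown actor: {actor!r}")
        event = AuditEvent(
            actor, action, detail, datetime.now(timezone.utc).isoformat()
        )
        self._events.append(event)
        return event

    def by_actor(self, actor: str) -> list[AuditEvent]:
        """Return the events recorded for one kind of actor, in order."""
        return [e for e in self._events if e.actor == actor]

    def to_json(self) -> str:
        """Export the full trail, for example to attach to a matter file."""
        return json.dumps([asdict(e) for e in self._events], indent=2)


log = AuditLog()
log.record("machine", "draft", "Generated first draft of claim 1")
log.record("human", "review", "Attorney edited and approved claim 1")
```

Separating the two kinds of actors makes it straightforward to show, if asked, which steps were generated by the tool and which were reviewed or changed by the practitioner.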

5. Societal Impact: How will AI change the future of patent practitioners?

The final risk to consider is the societal impact of AI technology. A common fear is that new technology will replace jobs. A managing partner at a law firm might also wonder, "Should I hire more associates, or should I use AI?"

AI tools should be seen as assistants, not replacements. For example, no one would submit a brief or application without running spellcheck; similarly, AI should be used to enhance, not replace, human expertise. Clients will still need attorneys, but they may prefer those who utilize AI effectively.

Patent practitioners should ensure that AI tools enhance critical thinking and expertise rather than fostering over-reliance, which can lead to errors and skill erosion. AI can take on routine tasks, allowing human professionals to focus on complex, high-value work. Adapting workflows to leverage human-machine complementarity is crucial. Simple tasks can be delegated to AI, while complex tasks remain with human experts. This approach requires firms to develop talent and cultivate adaptability to technological changes.

In practice, attorneys already consider the appropriate level of expertise needed for various tasks within a firm. This thought process should extend to AI, recognizing when it's beneficial to bring in AI to handle routine tasks efficiently. By choosing vendors that share this philosophy and integrate AI tools seamlessly into workflows, firms can ensure that AI serves as a valuable assistant.

Conclusion: GenAI in IP Practice

Selecting the right AI service provider is vital for patent practitioners. Understanding and mitigating risks associated with AI, such as confidentiality, security, factuality, regulatory compliance, and societal impact, ensures the safe and effective use of AI in patent practice. Davinci exemplifies good practices, providing robust security measures, high-quality data, regulatory compliance, and a focus on human-machine complementarity.

By asking the right questions and partnering with trustworthy providers, patent practitioners can harness the potential of AI while safeguarding their practice and client data.

For more detailed insights and to explore how Davinci can assist your practice, feel free to reach out and try it for yourself.
